Companies increasingly turn to Generative AI (GenAI) bots to improve customer service, streamline operations, and drive innovation. These systems offer clear advantages, but they also bring significant challenges. The most pressing is the accuracy and reliability of the responses the bots generate. Ensuring that these outputs are factually correct and compliant with organizational policies is vital, which is why a robust fact-checking layer is needed.

Imagine a customer asking a chatbot for critical financial advice and receiving inaccurate information. The consequences could be dire, ranging from reputational damage to significant financial loss. A stringent fact-checking mechanism acts as a safety net: it mitigates these risks and increases trust in AI-generated responses. With such a layer in place, businesses can help ensure that their GenAI bots consistently deliver reliable, accurate, and compliant information.

Automating Risk Identification

A comprehensive AI risk management layer automates the identification of risks in AI-generated outputs, significantly reducing the need for manual oversight. Techniques such as Retrieval-Augmented Generation (RAG) can be used to retrieve trusted reference data, such as product documentation or policy text, against which the accuracy of each AI response is checked. This improves the reliability of the information and keeps responses aligned with organizational policies and standards, making these AI systems safer and more efficient.

Real-World Applications: Insurance Claim Adjustments

In the insurance industry, for example, claim assessment bots must provide accurate and compliant information. By cross-referencing bot-generated responses against a comprehensive knowledge graph, companies can significantly reduce client disputes and build trust. This approach keeps claim adjustments consistently aligned with internal policies, so clients receive reliable, policy-adherent information. Beyond streamlining the claim adjustment process, it strengthens the relationship between insurers and clients through transparent and accurate communication.
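
To make the idea of cross-referencing against a knowledge graph concrete, here is a minimal sketch that stores policy facts as (subject, relation, object) triples and checks claims extracted from a bot's answer against them. Everything in it is hypothetical: the policy facts, the `POLICY_GRAPH` dictionary, and the `check_claims` function are illustrations only. It also assumes the claims have already been extracted from the bot's free-text answer (a step a real system might delegate to a language model) and uses an in-memory dictionary in place of a graph database.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical policy knowledge graph, simplified to one object per
# (subject, relation) pair. A real system would query a graph database.
POLICY_GRAPH: dict[tuple[str, str], str] = {
    ("water_damage", "covered"): "yes",
    ("water_damage", "deductible_usd"): "500",
    ("flood", "covered"): "no",
}


@dataclass
class ClaimCheck:
    subject: str
    relation: str
    claimed: str
    expected: Optional[str]
    status: str  # "supported", "contradicted", or "unverifiable"


def check_claims(claims: list[tuple[str, str, str]]) -> list[ClaimCheck]:
    """Compare (subject, relation, object) claims from a bot answer with the graph."""
    results = []
    for subject, relation, claimed in claims:
        expected = POLICY_GRAPH.get((subject, relation))
        if expected is None:
            status = "unverifiable"  # no fact in the graph to check against
        elif expected == claimed:
            status = "supported"
        else:
            status = "contradicted"  # the bot's claim conflicts with policy
        results.append(ClaimCheck(subject, relation, claimed, expected, status))
    return results


# Claims assumed to have been extracted from one bot answer.
bot_claims = [
    ("water_damage", "covered", "yes"),
    ("flood", "covered", "yes"),
    ("mold", "covered", "yes"),
]
for check in check_claims(bot_claims):
    print(check.subject, check.relation, check.status)
```

In this example the flood claim is flagged as contradicted and the mold claim as unverifiable. How to treat unverifiable claims (block them, escalate to a human adjuster, or let them through) is a policy decision rather than a technical one.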

A Comprehensive Workflow for Reducing Risks

A structured workflow can make a substantial difference for enterprises that want to deploy AI solutions safely. Here is one example designed to address these common concerns:

- Upload Data: The process begins with uploading client data into three distinct categories: Product Intelligence, Policies, and Internal Employee Insights. These categories form the reference base for risk analysis.

- Data Transformation: Once uploaded, the data is processed with techniques such as Propositional Chunking, which splits text into short, self-contained statements that each express a single fact. Each propositional chunk and each original sentence is then converted into an embedding and stored in a database (e.g., in a column of VECTOR type) for efficient retrieval.

- Risk Analysis: The GenAI-generated outputs are sent to an API for risk evaluation. There, they are vectorized and compared against the client data store using dot product search to find the most relevant matches. This cross-referencing helps identify inconsistencies or hallucinations in the outputs.

- Contextual Verification: To make the analysis thorough, the top three search results are traced back to their positions in the originally uploaded content. Additional context, such as the two sentences before and after each matching chunk, is retrieved to enrich the RAG prompt, improving the reliability and relevance of the analysis.

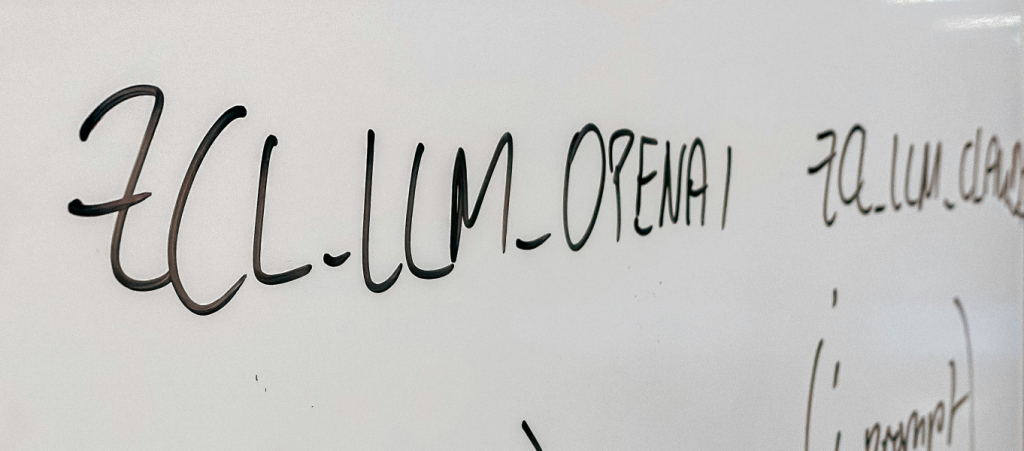

- Comprehensive Report: The enriched prompts are used to generate a comprehensive risk analysis report. By default, the system can use widely used AI models, but the prompts can be rerouted to proprietary or custom open-source language models for tailored needs. The report typically includes an overall risk level, the individual risks and their respective levels, references to the original sources, and actionable mitigation ideas.
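
The retrieval steps of this workflow (embedding, dot product search, top-three selection, and context expansion) can be sketched in Python. This is a minimal, self-contained illustration built on stated assumptions, not the system described above: a naive sentence splitter stands in for propositional chunking (which typically relies on a language model to rewrite text into atomic statements), a hashed bag-of-words vector stands in for a real embedding model, and an in-memory list stands in for a database VECTOR column. All function names (`embed`, `build_index`, `top_k`, `with_context`, `build_report_prompt`) and the sample policy text are hypothetical.

```python
import hashlib
import math
import re

DIM = 256  # size of the toy embedding space (assumption)


def split_sentences(text: str) -> list[str]:
    # Naive sentence splitter; a stand-in for propositional chunking.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def embed(text: str) -> list[float]:
    # Toy embedding: hashed bag of words, L2-normalized so that the dot
    # product equals cosine similarity. Replace with a real embedding model.
    vec = [0.0] * DIM
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = int(hashlib.md5(word.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def build_index(documents: dict[str, str]):
    # One index entry per sentence: (doc_id, sentence_position, embedding).
    index, sentences = [], {}
    for doc_id, text in documents.items():
        sentences[doc_id] = split_sentences(text)
        for pos, sentence in enumerate(sentences[doc_id]):
            index.append((doc_id, pos, embed(sentence)))
    return index, sentences


def top_k(index, query: str, k: int = 3):
    # Dot product search over every stored vector; returns the k best hits.
    q = embed(query)
    scored = [(dot(q, vec), doc_id, pos) for doc_id, pos, vec in index]
    return sorted(scored, key=lambda hit: hit[0], reverse=True)[:k]


def with_context(sentences, doc_id: str, pos: int, window: int = 2) -> str:
    # Map a hit back to its source and add `window` sentences on each side.
    doc = sentences[doc_id]
    return " ".join(doc[max(0, pos - window): pos + window + 1])


def build_report_prompt(bot_output: str, index, sentences) -> str:
    # Assemble the enriched prompt that would be sent to an LLM for the report.
    evidence = [
        f"[{doc_id}#{pos}, score={score:.2f}] {with_context(sentences, doc_id, pos)}"
        for score, doc_id, pos in top_k(index, bot_output)
    ]
    return (
        "Assess the risk that the bot output below is inaccurate or violates policy.\n"
        "Return an overall risk level, individual risks with levels, "
        "references to the evidence, and mitigation ideas.\n\n"
        f"Bot output: {bot_output}\n\nEvidence:\n" + "\n".join(evidence)
    )


docs = {
    "policies": "Claims must be filed within 30 days. Flood damage is not covered. "
                "Water damage from burst pipes is covered. The deductible is 500 dollars.",
    "product": "The Home Plus plan includes theft protection. Glass breakage is covered.",
}
index, sentences = build_index(docs)
print(build_report_prompt("Flood damage is covered under your plan.", index, sentences))
```

Because the vectors are normalized to unit length, dot product and cosine similarity rank results identically, which makes dot product search a natural choice for normalized embeddings. The string returned by `build_report_prompt` would then be sent to whichever language model generates the report.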

By following this structured workflow, companies can significantly reduce the risks associated with GenAI hallucinations. The result is better compliance and accuracy, along with greater trust and confidence in AI applications.

The Imperative of Fact-Checking Layers

Given the stakes involved, businesses must not overlook the necessity of a fact-checking layer. As Michael Schmidt, Chief Technology Officer at DataRobot Inc., aptly noted, “Almost every customer I’ve talked to has built some sort of bot internally but hasn’t been able to put it into production or has struggled with it because they don’t trust the outputs enough.” This lack of trust often stems from the absence of effective risk management and fact-checking mechanisms.

In conclusion, the case for a reliable fact-checking layer in AI systems is clear. By integrating robust risk management solutions, businesses can mitigate risks, build trust, ensure compliance, and get the most out of their GenAI bots. As AI continues to evolve, so too must our strategies for managing and verifying its outputs. Let’s embrace comprehensive solutions that prioritize accuracy and compliance, paving the way for safer and more reliable AI applications.
